Google DeepMind is advancing the capabilities of its Gemini models in addressing intricate challenges through multimodal reasoning that encompasses text, images, audio, and video. However, these capabilities have primarily been limited to the digital environment. For artificial intelligence to be truly beneficial and effective in the physical world, it must exhibit “embodied” reasoning—the human-like capacity to understand and respond to the surrounding environment—while also safely executing actions to achieve objectives.

They are introducing two new AI models based on Gemini 2.0, which lay the foundation for a new generation of helpful robots.

First up is Gemini Robotics, an innovative vision-language-action (VLA) model developed from Gemini 2.0, which now includes physical actions as a new way to directly control robots. Next is Gemini Robotics-ER, a version of the Gemini model that boasts enhanced spatial understanding, allowing roboticists to execute their programs by leveraging Gemini’s embodied reasoning (ER) capabilities.

These two models allow a diverse array of robots to tackle a broader spectrum of real-world tasks than ever before. In our ongoing efforts, we are collaborating with Apptronik to create the next generation of humanoid robots, known as Gemini 2.0. Additionally, we are engaging with a select group of trusted testers to shape the future of Gemini Robotics-ER.

We are excited to delve into the potential of our models and to keep advancing them towards practical applications in the real world.

Gemini Robotics: Our most advanced vision-language-action model

Gemini Robotics utilizes Gemini’s comprehensive understanding of the world to adapt to new situations and tackle a broad range of tasks right from the start, even those it hasn’t encountered during training. It excels at handling unfamiliar objects, varied instructions, and different environments. In our technical report, we demonstrate that, on average, Gemini Robotics achieves over twice the performance on an extensive generalization benchmark when compared to other leading vision-language-action models.

Interactivity

To function effectively in our physical world, robots need to interact smoothly with people and their environment while quickly adapting to new situations.

Gemini Robotics, built on the Gemini 2.0 platform, is designed for intuitive interaction. It utilizes Gemini’s advanced language comprehension skills, allowing it to understand and respond to commands given in everyday conversational language, including various languages.

This model can interpret a wider range of natural language instructions compared to earlier versions, adjusting its actions based on your input. It also constantly observes its environment, identifies changes, and modifies its behavior as needed. This level of control, or “steerability,” enhances collaboration between people and robot assistants in various settings, from homes to workplaces.

If an object slips from its grasp or someone moves an item around, Gemini Robotics quickly replans and carries on — a crucial ability for robots in the real world, where surprises are the norm.

Dexterity

The third essential element for creating a useful robot is its ability to move skillfully. Many simple tasks that people do easily need a high level of fine motor skills, which robots still struggle with. However, Gemini Robotics can handle very complicated, multi-step activities that demand precise movements, like folding origami or packing a snack into a Ziploc bag.

Multiple Embodiments

Gemini Robotics is versatile and can work with various types of robots due to its flexible design. While we mainly trained the model using data from the bi-arm robotic platform ALOHA 2, we also showed that it can manage a bi-arm setup using Franka arms, which are commonly found in academic settings. Additionally, Gemini Robotics can be tailored for more advanced models, like the humanoid Apollo robot created by Apptronik, aiming to perform tasks in real-world scenarios.

Gemini Robotics-ER is capable of handling all the essential tasks for robot control straight from the start. This includes perception, estimating the robot’s state, understanding its surroundings, planning, and generating code. In this complete setup, the model shows a success rate that is 2 to 3 times higher than that of Gemini 2.0. Additionally, when code generation alone isn’t enough, Gemini Robotics-ER can utilize in-context learning, mimicking a few human demonstrations to find a solution.

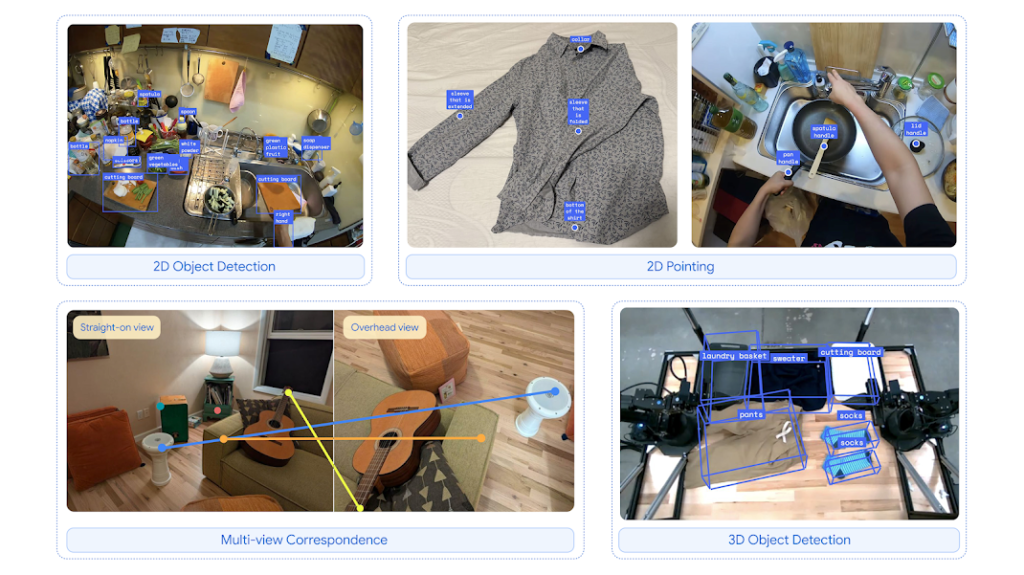

Gemini Robotics-ER excels at embodied reasoning capabilities including detecting objects and pointing at object parts, finding corresponding points, and detecting objects in 3D.

Responsibly advancing AI and Robotics

As we investigate the ongoing possibilities of AI and robotics, we are adopting a comprehensive approach to ensure safety in our research, covering everything from basic motor control to an advanced understanding of meaning.

The safety of robots and the people nearby has always been a key concern in robotics. This is why roboticists implement traditional safety measures, such as preventing collisions, controlling contact forces, and maintaining the stability of mobile robots. Gemini Robotics-ER can connect with these essential low-level safety controllers tailored to each specific design. By enhancing Gemini’s fundamental safety features, we allow Gemini Robotics-ER models to assess whether an action is safe in a particular situation and to respond accordingly.

For more daily updates, please visit our News Section.